Understanding Selection Bias: The Story of Abraham Wald

Introduction

Some people think hospitals are the most dangerous places on earth, since more people die inside them than anywhere else. People who say this are technically right about the numbers, but they may be fooled by what’s known as selection bias. Selection bias occurs when we look at information that is not representative of the population we intended to study. Because the sample is biased, we then draw a false conclusion.

Selection Bias

Selection bias happens when the sample of data you are analyzing does not represent the entire population. This can lead to misguided conclusions that don’t accurately reflect the reality of the situation. It’s important to recognize how selection bias can affect our understanding and decision-making.

Abraham Wald's Story

One story that explains the bias perhaps better than any other is that of Abraham Wald. Wald was a brilliant mathematician who fled to the United States in 1938 after being persecuted by the Nazis in Austria. During World War II, he became a member of the Statistical Research Group, an elite think tank set up to aid the American war effort against Nazi Germany.

The Missing Planes

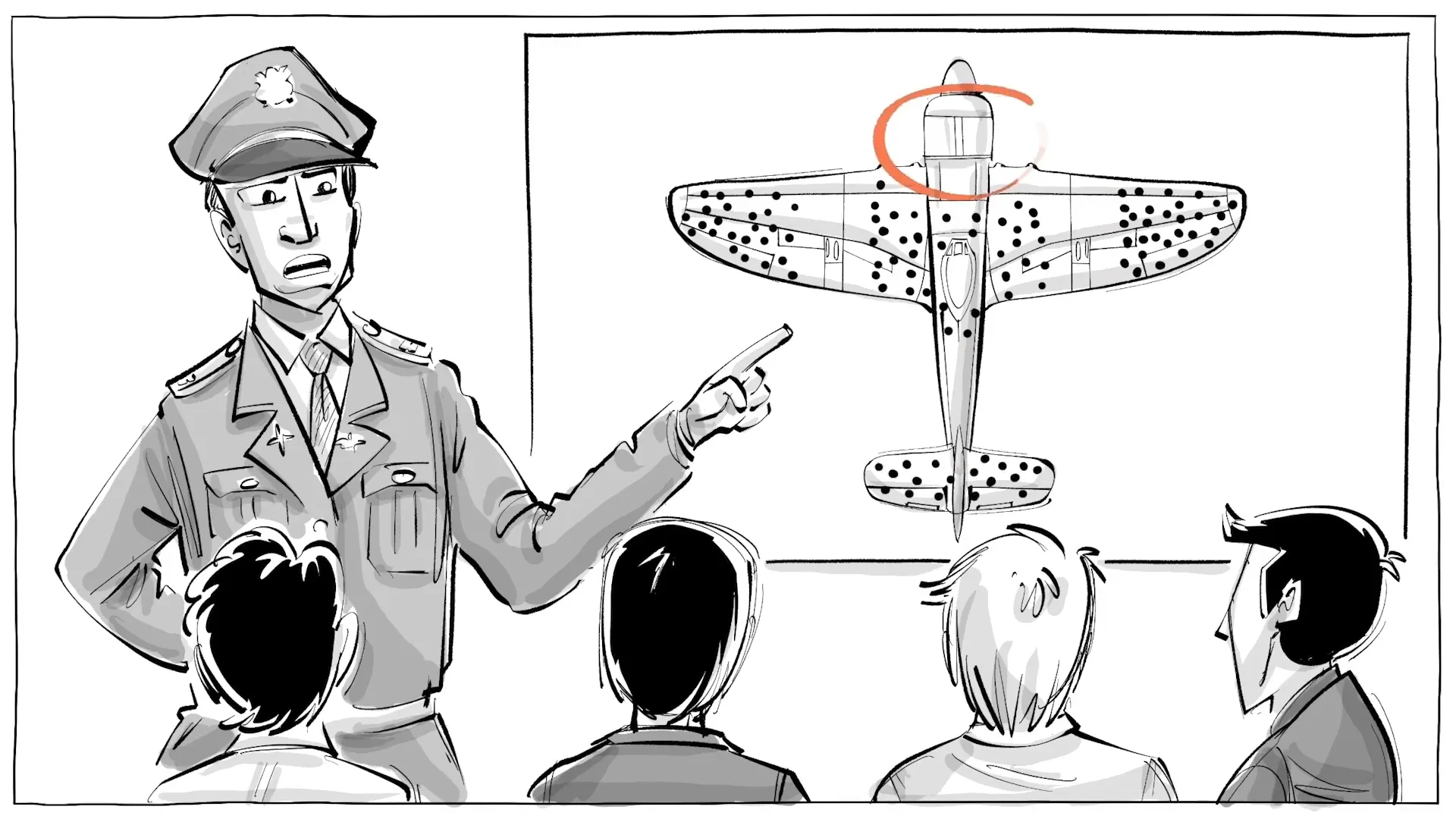

One day, the US military came to Wald and his colleagues with a problem. Many of their planes were being shot down, and they wanted to protect them with more armor. The officers presented Wald with data on all the aircraft that had made it back from their missions. These planes had lots of bullet holes in the fuselage and wings but far fewer in the engines. The officers planned to concentrate the armor where the planes were getting hit the most, and they asked Wald to compute the optimal amount of protection.

After studying the problem, Wald suggested something unexpected: the armor doesn’t go where the bullet holes are; it goes where the bullet holes aren’t. The officers were puzzled because they hadn’t noticed that their sample was biased, but Wald had. The data covered only the planes that returned, and planes hit in the engines tended not to return at all. To get a representative picture, he had to account for the missing holes, the missing planes, and the missing information.
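Wald’s reasoning can be checked with a toy simulation. This is a minimal sketch under made-up assumptions, not historical data: every bullet lands on the fuselage, the wings, or the engine with equal probability, and the hypothetical `LOSS_PROB` table says how likely one hit to each section is to bring the plane down. Even though hits are spread evenly across all planes, the returning planes show far fewer engine holes.

```python
import random

# Illustrative assumptions, not historical data: each hit lands on one of three
# sections uniformly at random, and a single hit downs the plane with this probability.
LOSS_PROB = {"fuselage": 0.05, "wings": 0.05, "engine": 0.60}
SECTIONS = list(LOSS_PROB)


def fly_missions(n_planes, hits_per_plane, seed=0):
    """Return bullet-hole counts per section for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_hits = dict.fromkeys(SECTIONS, 0)
    returned_hits = dict.fromkeys(SECTIONS, 0)
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        # The plane returns only if it survives every hit it took.
        returned = all(rng.random() >= LOSS_PROB[s] for s in hits)
        for s in hits:
            all_hits[s] += 1
            if returned:
                returned_hits[s] += 1
    return all_hits, returned_hits


all_hits, returned_hits = fly_missions(n_planes=10_000, hits_per_plane=3)
for s in SECTIONS:
    print(f"{s:<9} every plane: {all_hits[s]:>6}   returning planes only: {returned_hits[s]:>6}")
```

The officers only ever saw the second column. The engine holes didn’t disappear; they left with the planes that never came back.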

Simpson’s Paradox

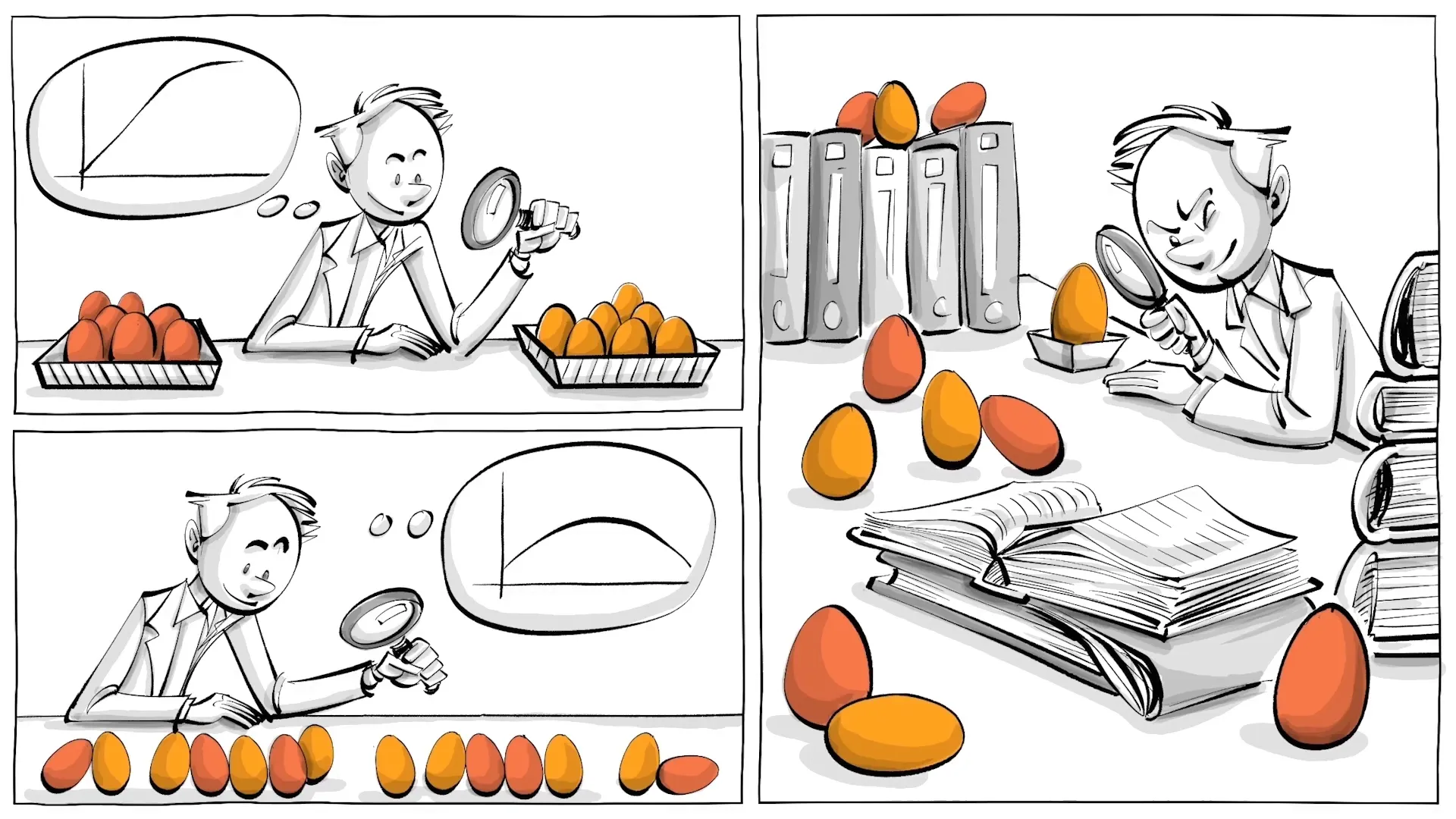

Selection bias isn’t just the result of missing information. Simpson’s paradox is a phenomenon in which a trend appears in several groups of data but disappears or even reverses when the groups are combined. It shows how important it is to really understand the data selected for analysis.

One famous example comes from graduate applications to the University of California, Berkeley, in 1973. The combined data showed that men who applied were more likely to be admitted than women, leading many to believe the university was discriminating against women. However, when researchers dug deeper and looked department by department, they found that men had mostly applied to less competitive departments with high admission rates, while women more often applied to competitive departments with low admission rates. After correcting for this, the data showed a small but statistically significant bias in favor of women, not men.
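The reversal is easier to believe with numbers in front of you. The counts below are invented for illustration and are not the 1973 Berkeley figures; the department names and helper functions are hypothetical. In each department women are admitted at a higher rate, yet pooling the departments makes men look favored, because most women applied to the department that rejects most applicants.

```python
# Hypothetical counts chosen to illustrate the paradox; these are NOT the 1973 Berkeley figures.
# Each entry is (applicants, admitted).
ADMISSIONS = {
    "A (less competitive)": {"men": (100, 80), "women": (20, 18)},
    "B (more competitive)": {"men": (20, 4), "women": (100, 30)},
}


def admit_rate(applicants, admitted):
    return admitted / applicants


def overall_rate(group):
    """Pool every department together, as the headline statistic does."""
    applicants = sum(dept[group][0] for dept in ADMISSIONS.values())
    admitted = sum(dept[group][1] for dept in ADMISSIONS.values())
    return admit_rate(applicants, admitted)


for name, dept in ADMISSIONS.items():
    men, women = admit_rate(*dept["men"]), admit_rate(*dept["women"])
    print(f"Department {name}: men {men:.0%}, women {women:.0%}")
print(f"All departments: men {overall_rate('men'):.0%}, women {overall_rate('women'):.0%}")
```

This prints 80% vs 90% for department A, 20% vs 30% for department B, and 70% vs 40% overall: women do better in every department and worse in the total.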

The Common Thread

In Wald’s case, the planes shot in the engines never made it back, so they were never analyzed. Similarly, women at Berkeley weren’t being discriminated against; they simply applied to more competitive departments. And people who die in hospitals are often already seriously ill when they are admitted, so hospital patients can’t be compared with the general population. In each case, the lesson is to consider all the relevant factors and data before drawing a conclusion.

Conclusion

What are your thoughts? Is selection bias corrupting your decision-making? If it’s fooling you, what about the people behind the “research” you see published in popular media? Did they really make sure they selected an unbiased, fully representative sample?

What do you think?

If you still don’t quite understand it, here is a simple challenge to experience it firsthand: go out of your house, knock on the doors of your 10 nearest neighbors, and ask whoever answers whether they are afraid of strangers. When you are done, report your findings and explain what your research can tell us about your community.
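If you would rather see the result without knocking on any doors, here is a minimal sketch of the same experiment. The rates are assumptions made up for illustration: 30% of neighbors are afraid of strangers, everyone who isn’t afraid opens the door, and only 10% of those who are afraid do. The function name and parameters are hypothetical, and the simulation uses 10,000 neighbors instead of 10 so the effect isn’t drowned out by chance.

```python
import random


def door_survey(n_neighbors, p_afraid, p_open_if_afraid, seed=0):
    """Simulate knocking on doors; only people who open the door get asked."""
    rng = random.Random(seed)
    afraid_count = 0
    answers = []  # answers (True = "I'm afraid") from people who opened the door
    for _ in range(n_neighbors):
        afraid = rng.random() < p_afraid
        afraid_count += afraid
        # Assumption: people who aren't afraid always open; afraid people rarely do.
        opens = (not afraid) or rng.random() < p_open_if_afraid
        if opens:
            answers.append(afraid)
    true_rate = afraid_count / n_neighbors
    surveyed_rate = sum(answers) / len(answers) if answers else float("nan")
    return true_rate, surveyed_rate


true_rate, surveyed_rate = door_survey(n_neighbors=10_000, p_afraid=0.30, p_open_if_afraid=0.10)
print(f"Actually afraid of strangers: {true_rate:.1%}")
print(f"Afraid, according to the survey: {surveyed_rate:.1%}")
```

Under these assumptions the survey reports only a few percent, because the people most likely to say “yes” are exactly the ones who never open the door.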